Differentiable Programming for Learnable Graphs: Optimizing Agent Workflows with DSPy

How learnable graphs reduce manual tuning, scale decision-making, and make LLM workflows self-improving.

In my previous post, I looked at how differentiable programming can optimize tool selection in LLM workflows — turning routing decisions into learning targets. But tool calls are just one layer. The real impact comes when we apply those same ideas to the entire workflow — not just what an agent says, but how it reasons, routes, and responds.

This post explores what it means to make workflows themselves learnable. Not just the individual modules, but the graph that governs how inputs are interpreted and decisions are made. We’ll use DSPy to trace this structure from code, transform it into an optimization surface, and turn execution into something you can tune. All the code is available on GitHub [1].

Agent frameworks are often treated as if they’re born intelligent — clean, logical graphs where each node handles a single task: classify the input, fetch a document, return a response.

In reality, they’re rarely that elegant. Most agents are stitched together in brittle, static ways, with no capacity to adapt over time.

Take customer support. It’s a domain where agents are expected to handle diverse queries with minimal structure, but where most workflows quickly devolve into hardcoded decision trees.

A more idealized version might look like this:

The user writes in: “My order is late”, “I didn’t get my drink”, “Where’s my driver?” — and the agent routes that input through the appropriate branch. If the issue is lateness, it fetches the ETA. If it’s a missing item, it verifies the claim. If it’s both, it might escalate to policy. If nothing matches, it falls back to search.
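To make that rigidity concrete, here is a hypothetical hand-written version of the router just described. The keyword lists and branch names are my own illustration, not code from the post's repository.

```python
def route_ticket(text: str) -> str:
    """Hypothetical hardcoded router: every rule and branch is fixed by hand."""
    t = text.lower()
    late = any(k in t for k in ("late", "eta", "where's my driver"))
    missing = any(k in t for k in ("didn't get", "missing", "forgot"))
    if late and missing:
        return "escalate_to_policy"  # both problems at once
    if late:
        return "fetch_eta"
    if missing:
        return "verify_claim"
    return "search_fallback"  # nothing matched
```

The substring check for "eta" also fires on words like "details", so "Please update my account details" lands in the ETA branch. Fixing that means editing rules by hand, which is exactly the kind of manual tuning this post argues against.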

On the surface, the structure is sound: modular, predictable, easy to reason about. But beneath that order lies a deeper rigidity. The routing is fixed. The logic is brittle. And the agent doesn’t learn from experience: the LLM is hosted by a third party, so you can’t update its weights, and the hand-written graph around it never changes!

What seems like composability is just hardcoding in disguise. The graph only does what you told it to do!

This is where differentiable programming enters the picture.

Instead of defining a fixed graph, you define a program. That program can branch, loop, and call modules conditionally. More importantly, it can learn. Over time, the entire workflow becomes trainable: not just the model calls inside it, but the structure that connects them.

From Decision Trees to Programs That Learn

What makes differentiable programming interesting isn’t that it lets you build workflows — it’s that it lets those workflows learn.

The idea is simple:

Make the behavior of your system not just executable, but learnable.

DSPy is a Python library that adopts this model directly. You write agents as Python programs. Each component is a Module. Each call to a module becomes a node in a call graph. DSPy then traces the entire workflow during execution and compiles it into a structure that can be trained.
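How does a framework find the trainable parts without you registering them? A common pattern, used in spirit by PyTorch's `named_parameters()` and DSPy's `named_predictors()`, is to walk the object's attributes. The sketch below is a hypothetical mini-framework, not DSPy's implementation; `Predict`, `Module`, and the signature strings are illustrative stand-ins.

```python
class Predict:
    """Hypothetical predictor: holds the state an optimizer may tune."""

    def __init__(self, signature: str):
        self.signature = signature  # e.g. "ticket -> category"
        self.instructions = ""      # learnable: prompt text
        self.demos = []             # learnable: few-shot examples


class Module:
    def named_predictors(self, prefix=""):
        """Yield (dotted_name, Predict) for every Predict reachable via attributes."""
        for name, value in vars(self).items():
            path = f"{prefix}{name}"
            if isinstance(value, Predict):
                yield path, value
            elif isinstance(value, Module):
                # Recurse into submodules so nested predictors are found too.
                yield from value.named_predictors(prefix=path + ".")


class EtaBranch(Module):
    def __init__(self):
        self.respond = Predict("ticket, eta -> message")


class SupportAgent(Module):
    def __init__(self):
        self.classify = Predict("ticket -> category")
        self.eta = EtaBranch()
        self.missing = Predict("ticket -> message")
```

Listing `SupportAgent().named_predictors()` yields `classify`, `eta.respond`, and `missing`: the nesting of ordinary Python attributes is the structure, with no registration step.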

What begins as regular Python becomes a dynamic, inspectable, and optimizable program.

A Routing Workflow That Can Learn

Let’s take a concrete example: a customer service agent that handles four categories of issues:

ETA questions

missing items

driver issues

and a fallback for everything else.

Every incoming ticket is routed through a classification module. Based on the output, the program dispatches to one of four branches. Each branch handles its part and returns a unified response — a tag and a message body.

Because every branch returns the same format, the rest of the system doesn’t need to care how the response was generated. Logging, evaluation, and training can treat all branches the same.

Consider the following agent, which consists of five composable modules arranged in a clear decision graph.
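The post's original agent code isn't reproduced in this text. As a hypothetical stand-in, here is a plain-Python sketch with the same shape: one classifier and four branch handlers, each returning the same `Response(tag, body)` pair. The keyword classifier stands in for an LLM call and the branch messages are placeholders; none of this is DSPy's API.

```python
from dataclasses import dataclass


@dataclass
class Response:
    tag: str   # which branch produced the answer
    body: str  # message returned to the customer


def classify(ticket: str) -> str:
    """Stand-in for the LLM classifier module (keyword stub, hypothetical)."""
    t = ticket.lower()
    if "driver" in t:
        return "driver"
    if "late" in t:
        return "eta"
    if "missing" in t or "didn't get" in t:
        return "missing_item"
    return "other"


def handle_eta(ticket: str) -> Response:
    return Response("eta", "Checking the latest ETA for your order.")


def handle_missing(ticket: str) -> Response:
    return Response("missing_item", "Verifying which item was missing.")


def handle_driver(ticket: str) -> Response:
    return Response("driver", "Contacting your driver now.")


def handle_other(ticket: str) -> Response:
    return Response("other", "Searching the help center for an answer.")


# Dispatch table: the classifier's label picks the branch.
BRANCHES = {
    "eta": handle_eta,
    "missing_item": handle_missing,
    "driver": handle_driver,
    "other": handle_other,
}


def support_agent(ticket: str) -> Response:
    """Route a ticket; every branch returns the same Response shape."""
    return BRANCHES[classify(ticket)](ticket)
```

Because every handler returns a `Response`, logging or scoring code can read `.tag` and `.body` without knowing which branch ran.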

If you’re coming from traditional frameworks like LangGraph, Haystack, or Airflow, you might notice what’s missing: any explicit step that brings the graph into existence. You don’t register nodes. You don’t write add_edge.

You just write the program — and DSPy builds the graph from how it behaves.

Structure Without Rigidity

In traditional systems, structure is a constraint. You define a flow upfront and then hope your logic fits inside it. Differentiable programming flips that.

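DSPy, for instance, watches what your program does and builds the structure from that. Every call to a module is an edge. Every submodule is a node. The graph isn’t declared explicitly; it’s constructed implicitly by the host language’s own execution flow. You still write that control flow yourself, in ordinary Python; what DSPy derives from running it is a record of which modules ran, in what order, and with what inputs and outputs.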

This means you’re not limited to static paths. You can branch on values, loop through reasoning steps, or conditionally call tools — and DSPy will still know what your agent did, and when.
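A minimal sketch of how a trace can fall out of ordinary control flow, assuming a hypothetical `Tracer` that every module call passes through (DSPy's internals are organized differently). The `if` and the `for` loop are plain Python, and the recorded edges differ depending on what the input made the program do.

```python
class Tracer:
    """Hypothetical tracer: records (caller, callee) edges as modules run."""

    def __init__(self):
        self.stack = ["program"]  # who is currently executing
        self.edges = []           # (caller, callee) pairs, in execution order

    def call(self, name, fn, *args):
        self.edges.append((self.stack[-1], name))
        self.stack.append(name)
        try:
            return fn(*args)
        finally:
            self.stack.pop()


def fetch_eta(tracer):
    # A module that calls a nested module; the tracer sees the nesting.
    return tracer.call("lookup_eta", lambda: 12)


def run(ticket, tracer):
    label = tracer.call("classify", lambda t: "eta" if "late" in t else "other", ticket)
    if label == "eta":                      # branch on a runtime value
        eta = tracer.call("fetch_eta", fetch_eta, tracer)
        for _ in range(2):                  # loop: two refinement passes
            eta = tracer.call("refine", lambda e: e - 1, eta)
        return f"About {eta} minutes away."
    return tracer.call("search", lambda t: "Searching the help center.", ticket)
```

Running `run("my order is late", Tracer())` records classify, fetch_eta, the nested lookup_eta, and two refine calls; `run("change my email", Tracer())` records only classify and search. Same code, two different graphs.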

And once the structure is traced, it becomes a target for learning and improves over time.

Visualizing the Workflow

Because DSPy keeps track of the execution path, you can visualize what actually happened during a run. It’s not a flowchart of intentions. It’s a snapshot of behavior. The execution trace is fully inspectable: you can walk it, export it, or visualize it with standard Python tools. Here’s a gist with the visualization code.
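The post's own visualization code lives in the linked gist and isn't reproduced here. As an independent sketch, assuming the trace has already been flattened into (caller, callee) pairs (a hypothetical format, not DSPy's trace structure), the standard library is enough to emit Graphviz DOT text, which `dot -Tpng trace.dot -o trace.png` can render.

```python
from collections import Counter


def trace_to_dot(edges):
    """Render (caller, callee) pairs as a Graphviz DOT digraph.

    Repeated calls (e.g. from a loop) collapse into one edge labeled with a count.
    """
    counts = Counter(edges)  # dict subclass, so first-seen order is kept
    lines = ["digraph trace {"]
    for (src, dst), n in counts.items():
        label = f' [label="x{n}"]' if n > 1 else ""
        lines.append(f'  "{src}" -> "{dst}"{label};')
    lines.append("}")
    return "\n".join(lines)
```

Collapsing repeats keeps loop-heavy runs readable; drop the `Counter` if you want one arrow per call instead.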

This doesn’t just help with debugging. It makes the workflow concrete. You’re not guessing what ran; you can see it, measure it, and optimize it. Let’s see how that works.

Learning the Workflow

Alright, so how do we actually make this learning happen?

In DSPy, modules are not just structural elements; they are learning targets. Each Predict module carries internal parameters: prompt templates, few-shot examples, signature formats, and so on. When you compile a DSPy program with an optimizer, these parameters are tuned jointly across the entire graph.
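To see why instructions and demos behave like parameters, here is a hypothetical sketch of one predictor's learnable state and the prompt text assembled from it. DSPy's real adapters format prompts differently; the point is only that whatever values an optimizer picks flow straight into what the model sees.

```python
from dataclasses import dataclass, field


@dataclass
class PredictorState:
    # Hypothetical learnable state of one predictor (names are illustrative).
    instructions: str
    input_field: str
    output_field: str
    demos: list = field(default_factory=list)  # [(input, output), ...]

    def render(self, value: str) -> str:
        """Assemble the prompt text an LM would receive for one input."""
        parts = [self.instructions, ""]
        for x, y in self.demos:
            parts += [f"{self.input_field}: {x}", f"{self.output_field}: {y}", ""]
        parts += [f"{self.input_field}: {value}", f"{self.output_field}:"]
        return "\n".join(parts)
```

Appending a single demo changes the rendered prompt, and therefore the model's behavior, without touching the program's code.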

The result is not a single tuned prompt but a learned workflow. The structure of your program stays the same, but its behavior improves: your agent becomes more accurate, more consistent, and better aligned with the underlying task.

This is the essence of differentiable programming in the DSPy model: not gradient descent through every function, but end-to-end optimization across a parameterized, composable program.
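Stated as a formula (my notation, loosely following how DSPy frames compilation), the compile step searches for the predictor parameters $\Theta$ (each predictor's instructions and few-shot demos) that maximize a metric $\mu$ over a training set $D$ for the program $\Phi$:

$$\Theta^{*} = \arg\max_{\Theta} \; \frac{1}{|D|} \sum_{(x,\,y) \in D} \mu\big(\Phi_{\Theta}(x),\, y\big)$$

Because $\Theta$ consists of discrete text (instructions and lists of examples), optimizers explore it by proposing candidates and scoring them, not by following gradients.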

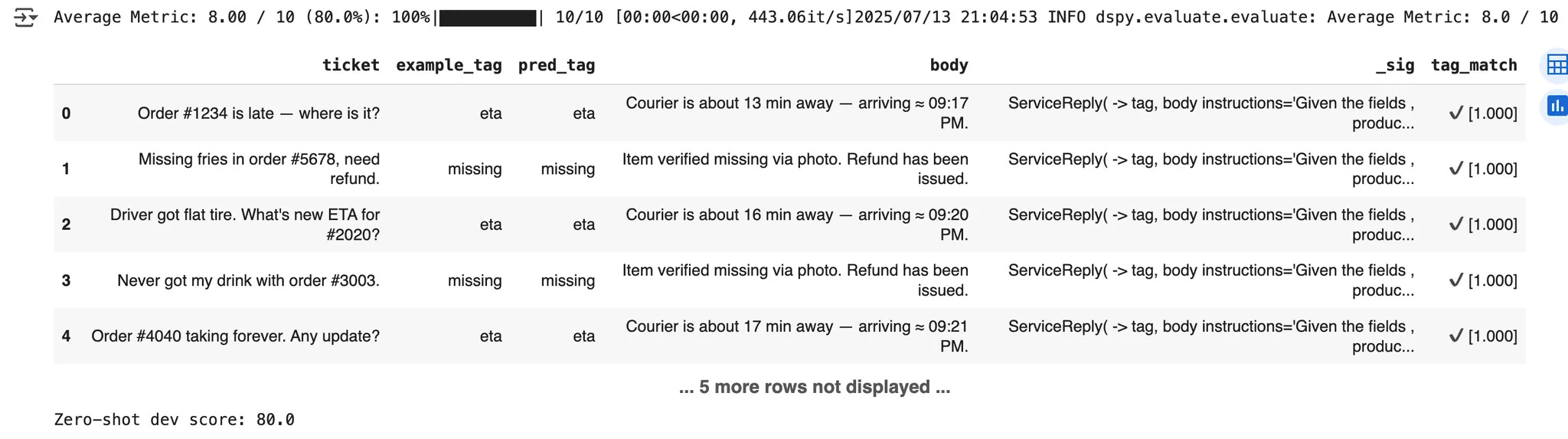

Let’s take a look at how this works. First, we’ll evaluate our current agent for accuracy using a simple dataset (which I generated with ChatGPT).

And here’s what it looks like:

Our initial eval lands at 80% accuracy on the dev set. Not bad for a first pass, though in a production setting, especially at the scale of a ride-sharing company handling millions of tickets with noisy, incomplete data, the starting number would likely be much lower ;)
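The evaluation step itself is small. Here is a hypothetical sketch of what an accuracy check amounts to (conceptually what DSPy's `Evaluate` utility does, minus threading and progress display); the toy classifier and the four labeled tickets are illustrative, not the post's dataset.

```python
def evaluate(program, devset, metric):
    """Average a metric over (input, expected) pairs; returns a fraction in [0, 1]."""
    if not devset:
        raise ValueError("devset is empty")
    scores = [metric(program(x), y) for x, y in devset]
    return sum(scores) / len(scores)


def exact_tag_match(prediction, expected_tag):
    # Hypothetical metric: 1.0 if the predicted category matches the label.
    return float(prediction == expected_tag)


def toy_classifier(ticket):
    # Deliberately weak stand-in for the agent's routing step.
    return "eta" if "late" in ticket.lower() else "other"


devset = [
    ("My order is late", "eta"),
    ("Where is my food? It's late", "eta"),
    ("I didn't get my drink", "missing_item"),
    ("Where's my driver?", "driver"),
]
```

`evaluate(toy_classifier, devset, exact_tag_match)` scores this toy router at 0.5: it gets both lateness tickets and misses the other two. Swapping in a stricter or softer metric changes what "better" means for any later optimization.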

So how would we improve it? In today’s world of prompt optimization, we’d likely start hand-tweaking individual prompts, testing changes in Langfuse or MLflow, and iterating manually, one node at a time.

Differentiable programming gives us a different approach.

With DSPy, we don’t have to optimize each part in isolation. Instead, we treat the entire workflow (routing, branching, messaging) as something the system can learn. The optimizer becomes a feedback loop embedded in the program itself: you give it data, and it returns a better version of your agent, one that looks the same on the surface but, under the hood, routes smarter, reasons more cleanly, and adapts to the task at hand.

And this is the resulting output:

The evaluation lands at 100% (!) — which, while expected in a toy setting, still points to something deeper.

When a workflow is embedded inside a differentiable graph, execution itself becomes a target for optimization.

You define the behavior in code. DSPy traces the structure from how the program runs. And that structure — not just the outputs, but the decisions and pathways between them — becomes tunable.

You’re no longer just prompting an agent. You’re compiling a system that learns from every example you give it!
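The compile loop described in this section can be sketched in plain Python. This is a hypothetical, heavily simplified stand-in, loosely in the spirit of DSPy's few-shot optimizers such as `BootstrapFewShot` but not their algorithm: the "LM" is a word-overlap stub, the program's only parameters are its demos, and the optimizer greedily keeps a training example as a demo when doing so raises training accuracy.

```python
import re

STOPWORDS = {"i", "my", "the", "is", "a", "to", "from", "were", "was"}


def words(text):
    # Lowercased content words, keeping apostrophes ("didn't", "where's").
    return set(re.findall(r"[a-z']+", text.lower())) - STOPWORDS


def make_classifier(demos):
    """Hypothetical stub LM: copy the label of the demo sharing the most
    words with the ticket; with no overlapping demo, fall back to a rule."""
    def classify(ticket):
        best_label, best_overlap = None, 0
        for text, label in demos:
            overlap = len(words(ticket) & words(text))
            if overlap > best_overlap:
                best_label, best_overlap = label, overlap
        if best_label is not None:
            return best_label
        return "eta" if "late" in ticket.lower() else "other"
    return classify


def accuracy(program, dataset):
    return sum(program(x) == y for x, y in dataset) / len(dataset)


def optimize(trainset, max_demos=4):
    """Greedy demo selection: keep a training example as a demo only if
    it raises training accuracy. A simplified stand-in, not DSPy's algorithm."""
    demos = []
    best = accuracy(make_classifier(demos), trainset)
    for example in trainset:
        if len(demos) >= max_demos:
            break
        trial = demos + [example]
        score = accuracy(make_classifier(trial), trainset)
        if score > best:
            demos, best = trial, score
    return make_classifier(demos), demos, best


trainset = [
    ("My order is late", "eta"),
    ("I didn't get my drink", "missing_item"),
    ("Where's my driver?", "driver"),
    ("The fries were missing from the bag", "missing_item"),
]
devset = [
    ("My pizza is running late", "eta"),
    ("I didn't get my fries", "missing_item"),
    ("My driver went to the wrong house", "driver"),
]
```

On these seven hand-written examples, held-out accuracy goes from 1/3 with no demos to 1.0 after `optimize(trainset)`, and the program's code never changes. That jump is an artifact of toy data; selecting demos by training accuracy can also overfit, which is why scoring on a separate dev set matters.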

From Infrastructure to Intelligence

Most agent frameworks today function like infrastructure. They manage state, route between tools, and follow predefined steps. They’re reliable executors — but they don’t learn. They don’t adapt. They run the same way, regardless of what they see.

Differentiable programming changes that foundation. Instead of prescribing a fixed path, you define a space of behaviors — and let data shape which paths the system learns to take. Your agent becomes something more than a script. It becomes a system that can evolve.

That’s the difference between infrastructure and intelligence. And as workflows increasingly reflect judgment, not just coordination, that difference becomes essential.

This is the second post in an ongoing series on learnable graphs for LLM-powered workflows. I’ll be exploring topics like embedding tools into latent spaces, evaluating trainable models for tool use, weight-level workflow learning, and more. Follow along, and feel free to share feedback or questions as we go!
